How to classify text quickly with vLLM and Mistral

Introduction

Many researchers have started to use ChatGPT (or similar LLMs) to extract information from text data. These models are usually easy to use, and people have open-sourced their approaches to working with them (see e.g. this repository by Prashant Grag). LLMs have clearly changed text classification: on difficult classification tasks they often outperform traditional methods such as BERT or Sentence-BERT (SBERT), which have proven their strength in tasks like sentiment analysis. However, running inference with these models can be slow and expensive when dealing with large datasets. In this post, we’ll explore how to use vLLM, an open-source Python-based inference engine, to run Mistral efficiently on multiple GPUs for very fast text classification.

For this tutorial, we will grab some comments from Reddit and classify their sentiment. You could of course classify something harder than sentiment, but here the focus is on parallelization and fast inference, not on the classification task itself.

We’ll focus on a high-performance computing cluster running Red Hat Enterprise Linux (RHEL) 8.7 with 64-bit processors and NVIDIA A100 GPUs, though the approach can be adapted to other hardware configurations. By the end of this post, you should know how to exploit a multi-GPU set-up to classify large amounts of text in parallel.

Set-up

For this tutorial, let’s keep things organized and start a new project folder.

```bash
mkdir classification_task
cd classification_task
```

I have really started to enjoy using uv for package management, but you may also use pip. If you want to follow along, install uv with the following command:

```bash
curl -LsSf https://astral.sh/uv/install.sh | sh
```

Next, set up the project by running:

```bash
uv init
uv venv
```

This will create the project structure and a virtual environment. You can activate it with:

```bash
source .venv/bin/activate
```

Installing packages into your environment is easy:

```bash
uv add [package_name]
# or, if you prefer the pip interface
uv pip install [package_name]
```

For now, let’s add some basic packages: pandas for data management, requests for pulling data, and openai for talking to our LLM server.

```bash
uv add pandas
uv add requests
uv add openai
```

Installing vLLM

We will use the open-source package vLLM as the backend that runs our Mistral model, and focus on the Python side that talks to the server. vLLM offers a convenient interface for running LLMs, including an OpenAI-compatible HTTP server. Its big advantage is performance: it batches incoming requests efficiently, which lets us parallelize and send thousands of texts to many GPUs.

The easiest way to install vLLM is with pip or uv (personally, I really enjoy uv these days for its speed and for how it helps structure research projects). On Linux, for example, simply run

```bash
# Install vLLM with CUDA 12.6.
pip install vllm     # if you are using pip
uv pip install vllm  # if you are using uv
```

from the terminal to download and install vLLM. This should take care of the set-up. If you are using a different version of CUDA, check the vLLM website to find the right installation for you.

Downloading the model weights

Next, we need to download the weights of the Mistral model we want to use. For this you need a Hugging Face account. Create one at huggingface.co if you don’t have one already, then head over to https://huggingface.co/settings/tokens to generate and save a READ access token. Next, go to https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.3 and accept the licensing agreement to gain access to the Mistral-7B-Instruct model.

We are now almost ready to download the model. First, log in with your access token:

```bash
huggingface-cli login --token "your_token"
```

If asked whether you want to add the token to your git credentials, you may choose “no”.

Next, download the model:

```bash
huggingface-cli download mistralai/Mistral-7B-Instruct-v0.3 --local-dir /path/to/your/models/mistral-7b-instruct
```

Inference in Python

Great, with the model weights in place, we are now ready to write the Python script that talks to vLLM to classify our texts.

Data

For this example, we will download some data from Reddit and classify the comments of two users to measure the sentiment of their posts. This will also let us compare the sentiment with Reddit’s upvotes and downvotes. Let’s start by downloading the data: you can choose any users and grab their last 100 comments from http://www.reddit.com/user/{username}/comments/.json?limit=100. We will grab the comments of the two Reddit CEOs that have public accounts (kn0thing and spez). Here is a quick Python snippet that downloads the comments and saves them to a CSV file:

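```python
import csv
import time

import pandas as pd
import requests

columns = ['author', 'body', 'ups', 'downs', 'score']
# Reddit asks clients to send a unique, descriptive User-Agent (this one is just an example)
headers = {"User-Agent": "sentiment-tutorial/0.1"}

for author in ["spez", "kn0thing"]:
    url = f"http://www.reddit.com/user/{author}/comments/.json?limit=100"
    response = requests.get(url, headers=headers)
    # Retry a few times if Reddit rate-limits us or has a hiccup
    for _ in range(5):
        if response.status_code == 200:
            break
        time.sleep(10)
        response = requests.get(url, headers=headers)
    response.raise_for_status()  # give up with an error if we still failed
    data = response.json()

    # Each child of the listing holds one comment under its 'data' key
    df = pd.DataFrame([comment['data'] for comment in data['data']['children']])
    df = df[columns].copy()
    df.to_csv(f"{author}.csv", index=False, quoting=csv.QUOTE_NONNUMERIC, sep=';')
```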

Perfect: now that we have up to 100 comments from each of the CEOs, we can classify them using our LLM.

vLLM server

The trick to using multiple GPUs is to run one vLLM server per GPU and send requests to the different servers from one central Python program.

So let’s start the vLLM servers first (I will assume that you have at least two GPUs to run vLLM on, but you can follow along with just one).

```bash
# Prefixing the command with CUDA_VISIBLE_DEVICES pins each server to one GPU.
# Server 1: GPU 0, port 8000
CUDA_VISIBLE_DEVICES=0 vllm serve /path/to/your/models/mistral-7b-instruct \
    --port 8000 \
    --gpu-memory-utilization 0.9 \
    --max-model-len 32768 \
    --dtype auto \
    --api-key "1234" \
    --served-model-name "mistral" &

# Server 2: GPU 1, port 8001
CUDA_VISIBLE_DEVICES=1 vllm serve /path/to/your/models/mistral-7b-instruct \
    --port 8001 \
    --gpu-memory-utilization 0.9 \
    --max-model-len 32768 \
    --dtype auto \
    --api-key "1234" \
    --served-model-name "mistral" &
```

This starts two vLLM servers in the background: one on GPU 0 listening on port 8000 and one on GPU 1 listening on port 8001. Wait until both servers have finished loading the model before continuing.
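Instead of watching the logs, you can let Python wait for the servers. The sketch below uses a hypothetical helper, wait_for_server, and assumes that the OpenAI-compatible /v1/models route answers with HTTP 200 once a server is ready and accepts the --api-key value as a bearer token. The 600-second default timeout is an arbitrary choice. It sticks to the standard library so it adds no dependency.

```python
import http.client
import time
import urllib.request


def wait_for_server(base_url, api_key="1234", timeout=600.0, interval=5.0):
    """Poll `{base_url}/v1/models` until it returns HTTP 200.

    Returns True as soon as the server answers, or False if `timeout`
    seconds pass without a successful response.
    """
    request = urllib.request.Request(
        f"{base_url}/v1/models",
        # The key passed to `vllm serve --api-key` is sent as a bearer token
        headers={"Authorization": f"Bearer {api_key}"},
    )
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(request, timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except (OSError, http.client.HTTPException):
            # Connection refused, timeout or HTTP error: not ready yet
            pass
        time.sleep(interval)
    return False
```

For example, call `wait_for_server("http://localhost:8000")` and `wait_for_server("http://localhost:8001")` before starting the workers, and abort if either returns False.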

Scoring the posts

We will score each CSV file separately, each on a different GPU. For every file we also start a separate CPU process that sends the requests to its vLLM server, using Python’s multiprocessing module. So let’s write the function that does the actual inference:

BASE_PROMPT = """

<instructions>

Consider the following comment. Please determine the sentiment of the text. Choose one of three labels: positive, negative, neutral.

<\instructions>

<format>

Reply only with the labelas a string.

<\format>

<comment>

<\comment>

"""

ALLOWED_LABELS = ['positive','negative','neutral']

MODEL_NAME = "mistral"

def infer(client, text):

prompt = BASE_PROMPT.replace("",text)

resp = client.chat.completions.create(

model=MODEL_NAME,

messages=[{"role": "user", "content": prompt}],

max_tokens=2,

temperature=0.0,

extra_body={"guided_choice": ALLOWED_LABELS},

)

return resp.choices[0].message.content.strip()

The infer function takes an OpenAI client and a comment as input. It inserts the comment into the prompt and sends a request to the chat completions endpoint, allowing only the ALLOWED_LABELS as responses (guided_choice). Setting the temperature to 0 makes decoding greedy, which keeps the results reproducible.
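With guided_choice the server should only ever return one of the three labels, but a cheap client-side check keeps the pipeline robust if, for example, a reply gets cut off by max_tokens or guided decoding is not active. Here is a sketch of such a check. normalize_label is a hypothetical helper, not part of vLLM or the openai package, and it repeats ALLOWED_LABELS so the block runs on its own.

```python
from typing import List, Optional

ALLOWED_LABELS = ["positive", "negative", "neutral"]


def normalize_label(raw: str, allowed: List[str] = ALLOWED_LABELS) -> Optional[str]:
    """Map a raw model reply to one of `allowed`, or return None if it matches none."""
    # Drop surrounding whitespace, quotes and periods, and ignore case
    cleaned = raw.strip().strip("\"'.").lower()
    if cleaned in allowed:
        return cleaned
    if not cleaned:
        return None
    # Accept a cut-off reply such as "neut" if it is the prefix of exactly one label
    matches = [label for label in allowed if label.startswith(cleaned)]
    return matches[0] if len(matches) == 1 else None
```

In the worker you could then use `normalize_label(infer(client, text))` and inspect any rows that come back as None instead of silently keeping malformed labels.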

Next, we want to call this function from our workers:

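```python
import csv
import os

import pandas as pd
from openai import OpenAI


def worker(gpu_id, port, filepath):
    # Not strictly needed: the GPU is chosen by the vLLM server listening on `port`
    os.environ["CUDA_VISIBLE_DEVICES"] = str(gpu_id)
    base_url = f"http://localhost:{port}"
    client = OpenAI(base_url=f"{base_url}/v1", api_key="1234")  # same key as --api-key

    df = pd.read_csv(filepath, sep=";", quoting=csv.QUOTE_NONNUMERIC)
    # Classify every comment in the 'body' column
    df['sentiment'] = df['body'].apply(lambda text: infer(client, text))
    df.to_csv(filepath, index=False, quoting=csv.QUOTE_NONNUMERIC, sep=";")
```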

This worker function loads the CSV file, applies the infer function to each comment, and writes the results back to the same file in the same format. Note that it sends one request at a time and waits for each answer.
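Because the worker waits for each answer before sending the next request, each server only sees one request per worker at a time, which leaves most of vLLM’s batching unused. A sketch that keeps many requests in flight with a thread pool is shown below. The helper name classify_concurrently and the default of 32 parallel requests are assumptions to tune, and we assume the OpenAI client can be shared between threads.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def classify_concurrently(
    texts: List[str], classify: Callable[[str], str], max_workers: int = 32
) -> List[str]:
    """Apply `classify` to all texts using a pool of threads.

    Up to `max_workers` calls run at once, so the vLLM server receives several
    requests it can batch together. Results are returned in input order.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(classify, texts))
```

Inside the worker, the apply line would become `df['sentiment'] = classify_concurrently(df['body'].tolist(), lambda text: infer(client, text))`. Threads are sufficient here because each call spends its time waiting on the network, not on the CPU.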

Let’s run the worker function for the two files:

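```python
from multiprocessing import Process


def main():
    files = ["spez.csv", "kn0thing.csv"]
    gpus = [0, 1]
    ports = [8000, 8001]

    # Start one client process per file, each talking to its own vLLM server
    procs = []
    for gpu_id, port, filepath in zip(gpus, ports, files):
        p = Process(target=worker, args=(gpu_id, port, filepath))
        p.start()
        procs.append(p)

    # Wait until every file has been classified
    for p in procs:
        p.join()


if __name__ == "__main__":
    main()
```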

Now we can run everything by calling main().
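The main function hardcodes two files and two servers. If you have more files than GPUs (or only one GPU), one option is to assign files to servers round-robin and still start one worker per file, letting vLLM batch the requests of workers that share a server. Here is a sketch with a hypothetical helper name.

```python
from itertools import cycle


def assign_files_to_servers(files, servers):
    """Pair each file with a (gpu_id, port) server, cycling through the servers.

    Returns (gpu_id, port, filepath) tuples that can be passed directly as
    `args` to multiprocessing.Process(target=worker, ...).
    """
    if not servers:
        raise ValueError("need at least one (gpu_id, port) server")
    return [(gpu_id, port, path) for (gpu_id, port), path in zip(cycle(servers), files)]
```

In main, the loop would then read `for args in assign_files_to_servers(files, [(0, 8000), (1, 8001)]):` and create `Process(target=worker, args=args)`. With a single GPU, pass `[(0, 8000)]` and every file goes to the same server.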

The results

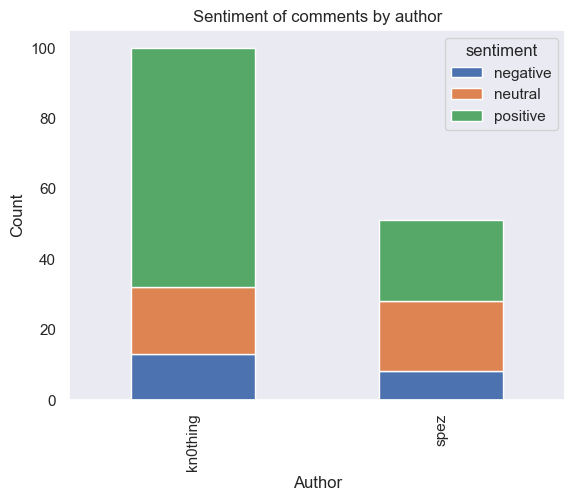

Let’s take a look at the results. Who was the more “positive” Reddit CEO?

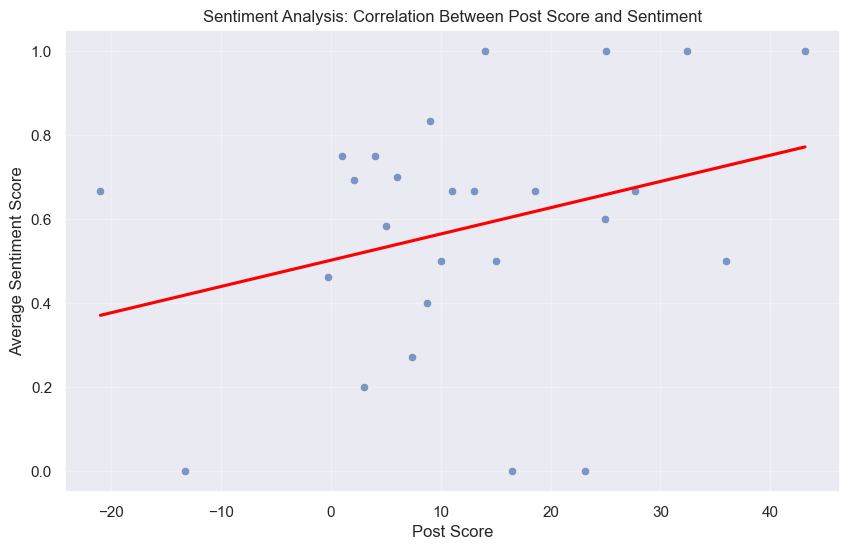

It seems that both CEOs are mostly positive in the sentiment of their posts. We can also check how sentiment correlates with the score, i.e. the difference between upvotes and downvotes.

Interesting. The score correlates with positive sentiment (at least for these two Reddit CEOs).
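One way to compute the numbers behind this comparison with pandas is sketched below. summarize_sentiment is a hypothetical helper that expects a DataFrame with author, sentiment and score columns, such as the two scored CSV files concatenated. It codes “positive” as 1 and every other label as 0, so the reported correlation is a point-biserial correlation (Pearson correlation with a binary variable).

```python
import pandas as pd


def summarize_sentiment(df: pd.DataFrame):
    """Return (label shares per author, correlation of 'is positive' with score).

    Expects the columns 'author', 'sentiment' and 'score'.
    """
    # Fraction of positive/negative/neutral comments per author (each row sums to 1)
    shares = pd.crosstab(df["author"], df["sentiment"], normalize="index")
    # Pearson correlation with a 0/1 indicator, i.e. a point-biserial correlation
    is_positive = (df["sentiment"] == "positive").astype(int)
    corr = is_positive.corr(df["score"])
    return shares, corr
```

For example, pass `pd.concat([pd.read_csv(f, sep=";") for f in ["spez.csv", "kn0thing.csv"]], ignore_index=True)`, assuming the scored files were written with the semicolon separator used for the downloads. With only a few hundred comments, treat the correlation as descriptive rather than conclusive.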