Working with documents of the German Bundestag

Drucksachen, Vorgänge, and the like

In 2022, I first started to work with parliamentary documents. While they are often freely available, it takes a lot of effort to combine the raw data into something that supports sophisticated analysis. Some scholars have open-sourced datasets of public policy-making documents (Remschel and Kroeber, 2022), but such datasets may not be kept up to date or may lack the information you need for your analysis.

This is why I was extremely happy when I learned that the German Bundestag has created an API that allows the automatic download of some of its public data. It gives full-text access to almost every written document. I then decided that it was time to write a Python wrapper for this API. This is how BundestagsAPy came into existence.

BundestagsAPy – An example workflow

To understand how you can use BundestagsAPy, let’s go through a simple example. Let’s say we want to understand how discussion in the Bundestag evolved during the covid-19 pandemic. To do so, we would like to access all debates during this time and analyze the language used. In this blog post, we will analyze all speeches between January 2020 and December 2021.

Setup

Let’s start by installing the required packages.

pip install bundestagsapy

pip install pandas

pip install seaborn

pip install matplotlib

Once everything is installed, we need to look up the current API key used by the Bundestag DIP API service. You can find it here. The current API key is I9FKdCn.hbfefNWCY336dL6x62vfwNKpoN2RZ1gp21 and is valid until 2025, but be sure to check for yourself whether it is still current. Now we can start downloading the speeches using BundestagsAPy.

Let’s start by importing all the required packages and setting up the API client.

import re

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

import BundestagsAPy

api_key = 'I9FKdCn.hbfefNWCY336dL6x62vfwNKpoN2RZ1gp21'

client = BundestagsAPy.Client(api_key)

Downloading the data

Before you access the API, make sure you familiarize yourself a bit with the structure of the Bundestag DIP API, which you can do here. The document describes the different types of documents available. Since we are interested in speeches, we will have to use the “Plenarprotokoll”, which contains the speeches of parliamentarians. We can get the meta information and the full text of the transcripts using the bt_plenarprotokoll_text method:

results = client.bt_plenarprotokoll_text(start_date='2020-01-01', end_date='2021-12-31', max_results=False)
results = [r for r in results if r.herausgeber == "BT"]

The method returns the meta information and texts of all transcripts between January 2020 and December 2021, the first two years with covid-19. However, it returns not only the Bundestag’s transcripts but also those of the Bundesrat. The second line filters those out.
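Before moving on, it can be worth checking what the filter actually removes. The following is a minimal sketch that does not call the API: the objects are hypothetical stand-ins built with SimpleNamespace, only the attribute names (herausgeber, datum, text) come from this post, and the value "BR" for Bundesrat transcripts is my assumption.

```python
from collections import Counter
from types import SimpleNamespace

# Hypothetical stand-ins for the objects returned by the client.
# "BR" as the publisher code of the Bundesrat is an assumption.
sample_results = [
    SimpleNamespace(herausgeber="BT", datum="2020-01-15", text="..."),
    SimpleNamespace(herausgeber="BR", datum="2020-02-14", text="..."),
    SimpleNamespace(herausgeber="BT", datum="2020-03-04", text="..."),
]

# Count transcripts per publisher before filtering.
publisher_counts = Counter(r.herausgeber for r in sample_results)

# Keep only the Bundestag transcripts, as in the filter above.
bt_only = [r for r in sample_results if r.herausgeber == "BT"]

print(publisher_counts)
print(len(bt_only), "Bundestag transcripts kept")
```

Running the same count on the real results should tell you how many Bundesrat transcripts were dropped.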

Cleaning the speeches

The text of a transcript can now be accessed like this:

text = results[0].text

print(text)

But the full transcript is quite long and typically contains a lot of meta information about the structure of the session. To ease the process, I have written a set of functions that clean the text and return the speakers and their speeches. TL;DR: The text is not well structured and requires a lot of cleaning.

def filter_stenography_part(text):
    try:
        # The opening mentions "Sitzung" and a form of "eröffnen", in either order.
        start = re.search(r'\bSitzung\b.*?\beröffn|\beröffn.*?\bSitzung\b', text, re.IGNORECASE | re.DOTALL).span()[0]
        # start = re.search(r'Die Sitzung ist eröffnet.|Ich eröffne die Sitzung|Sitzung eröffnet', text).span()[0]  # this is always in the first line of the opening of the session
    except AttributeError:
        # Sometimes the opening is not in the text; then we use this as a fallback.
        start = re.search(r'Bitte nehmen Sie Platz.', text).span()[0]
    # Move back to the second-to-last newline before the opening phrase,
    # so that the line preceding the opening sentence is included.
    start = text.rfind('\n', max(start - 500, 0), start)
    start = text.rfind('\n', max(start - 500, 0), max(start, 0)) + 1
    # This note always marks the end of the stenographic transcript.
    end = re.search(r'\(Schluss[\w\s]*: \d{1,2}.\d{1,2} Uhr\)', text).span()[0]
    return text[start:end]

def get_speech_positions(text):
    # Speeches always start with the name of the speaker and the party in parentheses,
    # e.g. Annalena Baerbock (BÜNDNIS 90/DIE GRÜNEN):
    parties = [
        r'\(CDU/CSU\):\n', r'\(SPD\):\n', r'\(AfD\):\n',
        r'\(FDP\):\n', r'\(DIE LINKE\):\n', r'\(BÜNDNIS 90/DIE GRÜNEN\):\n',
    ]
    # A speech ends when the president or a vice president takes the floor.
    end_pattern = re.compile(r'\nVizepräsident\w{0,2}\s[\w\s.-]+:\n|\nPräsident\w{0,2}\s[\w\s.-]+:\n')
    speeches = {}
    for party in parties:
        pattern = re.compile(party)
        starts = []
        for m in re.finditer(pattern, text):
            start = m.span()[0]
            # Step back to the newline before the speaker's name.
            start = text.rfind('\n', max(start - 500, 0), start)
            starts.append(start)
        # Find the end of each speech.
        spans = []
        for s in starts:
            end_match = end_pattern.search(text, s)
            # Fall back to the end of the text if no chair speaks after the speech.
            end = end_match.span()[0] if end_match else len(text)
            spans.append((s, end))
        party_text = party.replace(r'\(', '').replace(r'\):', '').replace(r'\n', '').strip()
        speeches[party_text] = spans
    return speeches

def get_speeches(text, positions):
    speeches = {}
    for party in positions.keys():
        party_speeches = []
        for start, end in positions[party]:
            party_speeches.append(text[start:end])
        speeches[party] = party_speeches
    return speeches

def clean_speech(speech):
    # The first line holds the speaker's name and party.
    speaker = speech[1:].split('\n')[0]
    speech = speech.replace(speaker, '')
    speaker = speaker.split('(')[0].strip()
    # Remove any statements not by the speaker (in parentheses, e.g. applause).
    speech = re.sub(r'\([^\)]+\)', '', speech)
    # Replace newline characters.
    speech = speech.replace('\n', ' ').strip()
    return (speaker, speech)

def build_dataframe(speeches):
    data = []
    for party in speeches.keys():
        for speaker, speech in speeches[party]:
            data.append([party, speaker, speech])
    return pd.DataFrame(data, columns=['party', 'speaker', 'speech'])

def clean_transcript(transcript):
    text = transcript.text.replace("\xa0", ' ')
    text = filter_stenography_part(text)
    positions = get_speech_positions(text)
    speeches = get_speeches(text, positions)
    clean_speeches = {}
    for party in speeches.keys():
        clean_speeches[party] = [clean_speech(s) for s in speeches[party]]
    df = build_dataframe(clean_speeches)
    df['date'] = transcript.datum
    return df

Let’s go through this in detail (if you don’t care about the details, just copy the code and continue with the next code block).

The first step for every transcript is to remove all parts that are not actually stenographic notes but meta information. The function filter_stenography_part() does just this: it searches for the phrases that commonly open a session and for the final note on the time the session closed, and keeps only the text in between.
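To make the idea concrete, here is a small sketch on a made-up miniature transcript. It is a simplified variant, not the function above: it only looks for two fixed opening phrases, and all names and lines in the sample are invented for illustration.

```python
import re

# Made-up miniature transcript; real transcripts are far longer.
sample = (
    "Plenarprotokoll 19/1\n"
    "Inhalt:\n"
    "Tagesordnungspunkt 1 ........ 3 A\n"
    "Beginn: 9.00 Uhr\n"
    "Präsident Dr. Max Mustermann:\n"
    "Guten Morgen! Die Sitzung ist eröffnet.\n"
    "Erika Musterfrau (SPD):\n"
    "Herr Präsident! Meine Damen und Herren!\n"
    "(Schluss: 17.32 Uhr)\n"
    "Anlagen zum Stenografischen Bericht\n"
)

opening = re.search(r"Die Sitzung ist eröffnet|Ich eröffne die Sitzung", sample)
closing = re.search(r"\(Schluss[\w\s]*: \d{1,2}.\d{1,2} Uhr\)", sample)

# Step back two line breaks from the opening phrase so that the header
# line of the person opening the session is kept as well.
line_start = sample.rfind("\n", 0, opening.start())
body_start = sample.rfind("\n", 0, line_start) + 1

body = sample[body_start:closing.start()]
print(body)
```

The table of contents before the opening and the annexes after the closing note are gone; only the stenographic part remains.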

Next, we want to extract the parts of the remaining transcript that are actually speeches. get_speech_positions() finds the speeches and returns the start and end position of each one. The function is a bit complicated, but essentially it looks for lines like “Annalena Baerbock (BÜNDNIS 90/DIE GRÜNEN):”, after which a speech starts. It then looks for the next time the president or a vice president takes the microphone, which signals the end of the speech (or an interruption).
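The following sketch shows the same start and end logic on a made-up snippet. It is a simplified variant for illustration only: it handles just two parties in a single pattern, and the speaker names are invented.

```python
import re

# Made-up snippet; the names are placeholders.
sample = (
    "\nPräsident Dr. Max Mustermann:\n"
    "Das Wort hat die Kollegin Musterfrau.\n"
    "Erika Musterfrau (SPD):\n"
    "Herr Präsident! Wir brauchen mehr Tests.\n"
    "(Beifall bei der SPD)\n"
    "Vizepräsidentin Anna Beispiel:\n"
    "Vielen Dank. Nächster Redner ist Herr Muster.\n"
    "Jan Muster (FDP):\n"
    "Frau Präsidentin! Ich sehe das anders.\n"
    "Präsident Dr. Max Mustermann:\n"
    "Die Sitzung ist geschlossen.\n"
)

# A speech starts after a line of the form "Name (PARTY):".
speaker_header = re.compile(r"\n([^\n(]+) \((SPD|FDP)\):\n")
# A speech ends when the president or a vice president takes the floor.
chair_header = re.compile(r"\n(?:Vizepräsident|Präsident)\w{0,2}\s[\w\s.-]+:\n")

found = []
for m in speaker_header.finditer(sample):
    end = chair_header.search(sample, m.end())
    stop = end.start() if end else len(sample)
    found.append((m.group(1), m.group(2), sample[m.end():stop]))

for name, party, speech in found:
    print(f"{name} [{party}]: {speech!r}")
```

Note that “Herr Präsident!” inside a speech does not end it, because the end pattern only matches at the start of a line and requires a trailing colon.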

The function get_speeches() then extracts the speeches from the text and saves them in a dictionary with the parties as keys and lists of speeches as values. With clean_speech(), we identify the speaker and remove all interruptions from the speech (interruptions are in parentheses). Finally, build_dataframe() builds a dataframe from the cleaned speeches, and clean_transcript() combines all of the above so that we can run a single function on every transcript in our results.
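As a quick illustration of the cleaning step, here is a sketch on one made-up raw speech. It follows the same idea as clean_speech() but is written independently, so treat it as an approximation rather than the exact function.

```python
import re

# Made-up raw speech as it would be cut out of a transcript.
raw_speech = (
    "\nErika Musterfrau (SPD):\n"
    "Herr Präsident! Wir brauchen mehr Tests.\n"
    "(Beifall bei der SPD)\n"
    "Das ist keine Kleinigkeit.\n"
)

# Split the header line ("Name (PARTY):") from the body of the speech.
header, _, body = raw_speech.lstrip("\n").partition("\n")
speaker = header.split("(")[0].strip()
party = header[header.find("(") + 1:header.rfind(")")]

# Drop stenographic remarks in parentheses, then flatten line breaks.
body = re.sub(r"\([^)]*\)", "", body)
body = " ".join(body.split())

print(speaker, "|", party, "|", body)
```

One caveat of this approach: anything the speaker says that the stenographers print in parentheses is removed along with applause and heckling.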

# Clean every transcript and stack the results into one dataframe.
frames = [clean_transcript(t) for t in results]
df = pd.concat(frames, ignore_index=True)

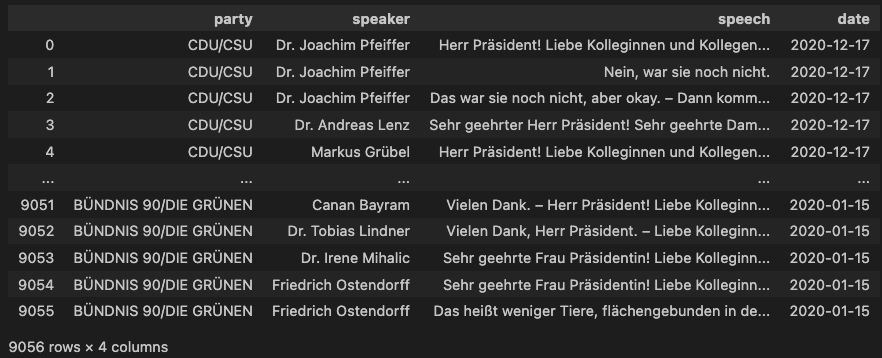

The resulting dataframe should look like the following.

Results

This was quite a bit of effort, but we are almost ready to create some graphs to understand how discussion in the Bundestag evolved during the pandemic. Let’s start by flagging speeches that contain words related to the virus and the measures to curb its spread.

df['covid'] = df['speech'].str.contains(
    r'virus|covid|corona|pandemie|epidemie|atemwegserkrankung',
    regex=True,
    flags=re.IGNORECASE
)
df['med_measures'] = df['speech'].str.contains(
    r'impf|maske|\bpcr|schnelltest',
    regex=True,
    flags=re.IGNORECASE
)
df['other_measures'] = df['speech'].str.contains(
    r'lockdown|ausgangssperr|hände.*?wasch.*?.|wasch.*?hände.*?.|desinfek|desinfi',
    regex=True,
    flags=re.IGNORECASE
)
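To see what these patterns catch, here is a small sketch that applies two of them to made-up sentences using only the re module. The sentences are invented; the patterns are copied from the code above.

```python
import re

covid_pattern = re.compile(
    r"virus|covid|corona|pandemie|epidemie|atemwegserkrankung", re.IGNORECASE
)
med_pattern = re.compile(r"impf|maske|\bpcr|schnelltest", re.IGNORECASE)

# Made-up example sentences.
samples = [
    "Die Corona-Pandemie fordert uns alle.",
    "Wir brauchen mehr Impfstoff und PCR-Tests.",
    "Der Haushalt 2021 ist solide finanziert.",
    "Die Coronahilfen kommen zu spät.",
]

# One (covid, med_measures) pair of flags per sentence.
flags = [(bool(covid_pattern.search(s)), bool(med_pattern.search(s))) for s in samples]

for sentence, (covid, med) in zip(samples, flags):
    print(f"covid={covid!s:5} med={med!s:5} {sentence}")
```

Because the patterns match substrings, compounds such as “Coronahilfen” are flagged too. The flags therefore mean that a speech mentions the topic, not that it is mainly about it.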

Let’s also distinguish parties by their role at the time:

df['party_type'] = df['party'].replace({
    'CDU/CSU': 'government',
    'SPD': 'government',
    'AfD': 'populists',
    'FDP': 'other opposition',
    'DIE LINKE': 'other opposition',
    'BÜNDNIS 90/DIE GRÜNEN': 'other opposition',
})

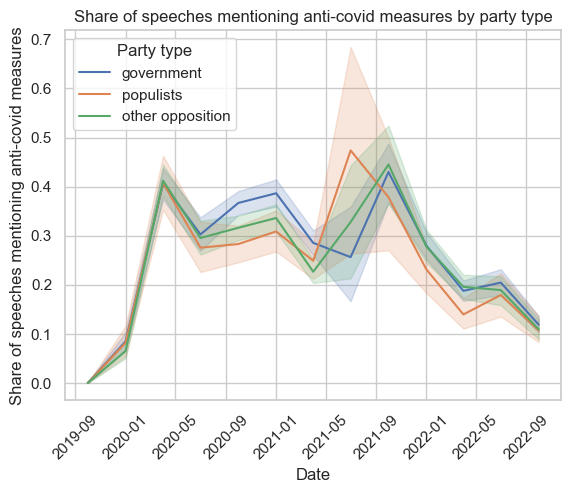

Now we are ready to plot the speeches over time:

df['date'] = pd.to_datetime(df['date'])
# Assign every speech to the first day of its quarter.
df['mdate'] = df['date'].dt.to_period('Q').dt.to_timestamp()
sns.lineplot(data=df, x='mdate', y='covid', hue='party_type')
plt.xlabel('Date')
plt.ylabel('Share of speeches mentioning covid')
plt.title('Share of speeches mentioning covid by party type')
plt.legend(title='Party type')
plt.xticks(rotation=45)
plt.show()

As we can see, right after the pandemic arrived in Germany, all parties started to talk a lot about covid; it was by far the dominant topic, with between 40% and 50% of speeches mentioning the virus.

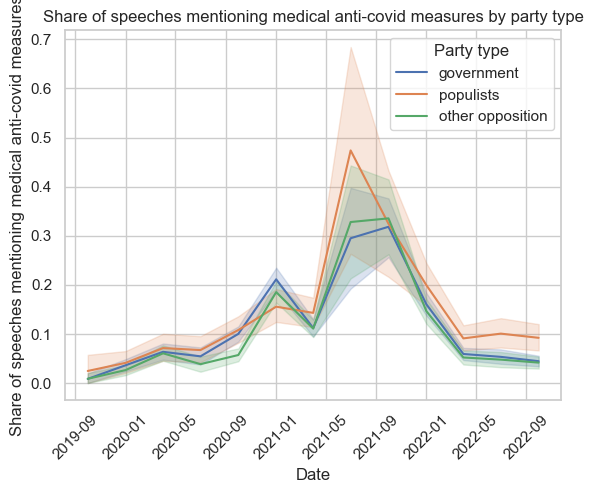

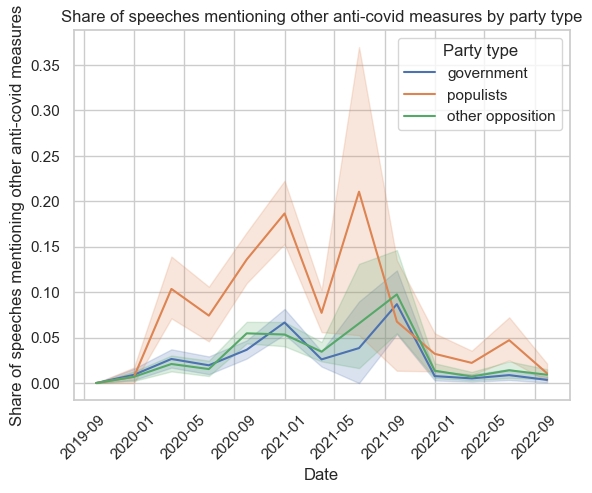
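If you prefer numbers to a plot, the shares can also be computed directly. By default, sns.lineplot averages all y values that share the same x value, and the mean of a True/False column is the share of True values. The sketch below reproduces that aggregation with a groupby on a made-up toy dataframe; only the column names are taken from this post.

```python
import pandas as pd

# Made-up toy data with the same columns as the real dataframe.
toy = pd.DataFrame(
    {
        "date": pd.to_datetime(
            ["2020-01-15", "2020-02-12", "2020-03-25",
             "2020-04-22", "2020-05-13", "2020-06-17"]
        ),
        "party_type": ["government", "government", "populists",
                       "government", "populists", "populists"],
        "covid": [False, True, True, True, True, False],
    }
)

# Assign every speech to the first day of its quarter.
toy["mdate"] = toy["date"].dt.to_period("Q").dt.to_timestamp()

# Share of speeches mentioning covid per quarter and party type.
shares = toy.groupby(["mdate", "party_type"])["covid"].mean().unstack()
print(shares)
```

Each cell is the value that the corresponding line in the plot passes through for that quarter.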

Let’s look at the discussion of measures as well:

# Medical measures
sns.lineplot(data=df, x='mdate', y='med_measures', hue='party_type')
plt.xlabel('Date')
plt.ylabel('Share of speeches mentioning medical anti-covid measures')
plt.title('Share of speeches mentioning medical anti-covid measures by party type')
plt.legend(title='Party type')
plt.xticks(rotation=45)
plt.show()

# Other measures
sns.lineplot(data=df, x='mdate', y='other_measures', hue='party_type')
plt.xlabel('Date')
plt.ylabel('Share of speeches mentioning other anti-covid measures')
plt.title('Share of speeches mentioning other anti-covid measures by party type')
plt.legend(title='Party type')
plt.xticks(rotation=45)
plt.show()

The figures show that all parties discussed medical measures to a similar degree and that discussion increased as the vaccine became available. Interestingly, the same is not true for conventional measures; here, populists seemed to pay much more attention. Of course, mentioning a measure could well mean criticizing it; therefore, in a next step, you could look at bigrams or run a sentiment analysis of the speeches. But let’s leave this for another post.

Jupyter Notebook

You can find the notebook for this post here.